Candu.ai

Challenge

Candu needed to expand its appeal to enterprise customers and encourage existing clients to grow their accounts. Research revealed that while customers valued Candu’s ability to create and iterate content quickly without engineering support, the platform lacked advanced analytics and A/B testing capabilities that larger organisations required to justify investment and demonstrate impact.

My Impact

I led research and design end to end, from discovery through delivery. I conducted customer and prospect interviews to uncover unmet needs, ran competitor analysis to identify market gaps, and designed, prototyped, and tested a no-code A/B testing feature for non-technical users. I also ensured alignment with engineering and maintained the design system throughout.

Solution

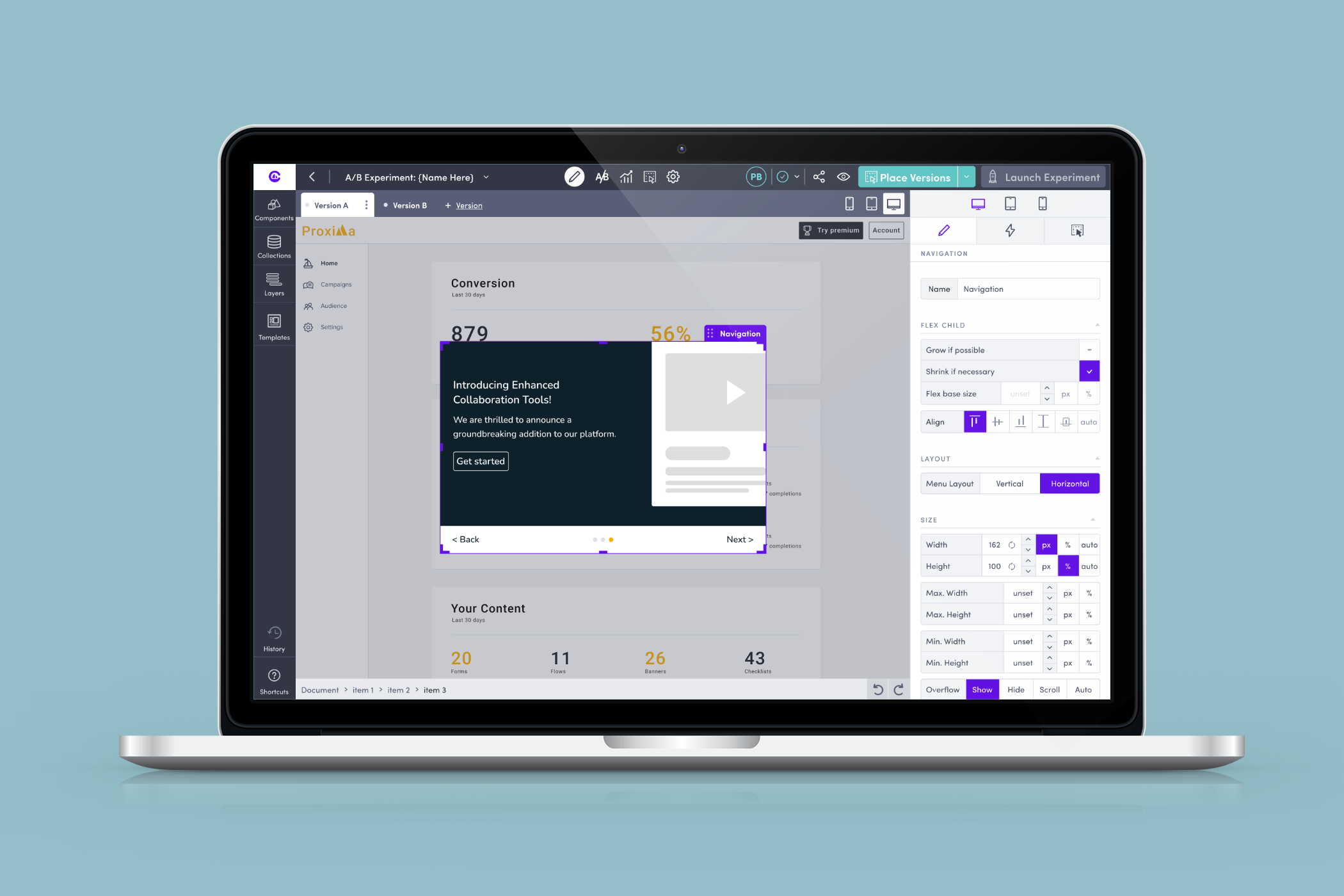

I designed an A/B testing feature that made experimentation simple and accessible to product and marketing teams without requiring engineering support. Customers could create content variants, define goals, and automatically distribute audiences, while clear result summaries surfaced key insights without statistical complexity. This feature strengthened Candu’s value proposition for enterprise clients and supported expansion into new markets.

Process

User Research

Uncovering Pain Points & Opportunities

To identify opportunities for expanding Candu’s appeal and securing larger enterprise contracts, I began by surveying our existing customers. The survey explored how Candu could better serve other teams within their organisations and asked respondents to rank the features they wished to see. I also interviewed sales prospects who had opted for competitor tools, aiming to understand what drove their decisions.

Key findings from this research included:

Candu’s core strength was enabling customers to create and iterate on content quickly, without engineering resources, which supported rapid experimentation.

While Candu supported fast creation and iteration, it lacked the advanced content analytics and A/B testing capabilities needed to convince leadership within customers’ organisations.

Data-oriented departments, such as marketing, required more advanced content and experimentation analytics.

Candu needed to offer a user-friendly A/B testing solution for non-technical users, unlike tools such as LaunchDarkly, which require engineering support.

Following these insights, I conducted additional interviews with Candu’s customers to delve deeper into their existing tools, workflows, and challenges with A/B testing.

The research uncovered several key insights:

A/B testing is too time-consuming for customers because it depends on engineering time, and engineering resources are limited in most organisations.

Many companies prioritise new features or bug fixes over A/B testing, leaving it underutilised.

Product managers and designers want to run A/B tests independently without requiring engineering support.

Users want the ability to test different types of content, such as pop-ups, modals, and inline content.

Participants want to A/B test content effectiveness against activation and retention metrics.

“As onboarding PM [product manager], I’m constantly thinking about new things we can test to learn more about whether they impact key metrics.”

“It takes too much effort for us to AB test today so we avoid it.”

Market & Competitor Analysis

Defining Our Edge

Alongside the user research, I conducted a comprehensive market and competitor analysis. I evaluated competitors and other A/B testing platforms based on their feature set, analytics capabilities, ease of integration, and the level of engineering input required. This analysis provided insights into the essential features customers expect and where Candu could differentiate, particularly by offering a no-code, easy-to-use solution.

UX Flows

Designing for Simplicity

A/B testing is complex in terms of content design, test settings, and system behaviour in different states (draft, live, ended). Using the insights from user research and competitor analysis, we mapped out UX flows for the feature in Whimsical, then reviewed them with engineering to assess feasibility within Candu’s existing architecture.

I then moved to wireframes and detailed UX flows in Figma, before creating prototypes for user testing.

Prototyping

Testing Different Approaches

From the UX flows, I created two initial prototypes in Figma for user testing. These prototypes focused on different approaches to setting up and distributing audience segments across A/B test variants.

Prototype A offered greater control over audience distribution but required more user input.

Prototype B simplified the process by automating audience distribution across variants, with a focus on ease of use.

The prototypes also explored different UI placements for experimental settings and used distinct colour hierarchies to guide users through the process. After initial testing, I developed a final prototype, incorporating feedback for another round of testing.
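To illustrate what automated audience distribution (the Prototype B approach) can look like behind the scenes, here is a minimal Python sketch. It is not Candu's implementation: the function name `assign_variant`, the hashing scheme, and the weights are all assumptions made for illustration. Hashing the experiment and user IDs together gives each user a stable position between 0 and 1, so the same person always lands in the same variant.

```python
import hashlib


def assign_variant(user_id: str, experiment_id: str, weights: dict[str, float]) -> str:
    """Deterministically map a user to a variant in proportion to the weights.

    Hypothetical helper for illustration only.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")

    # Hash experiment + user so assignments are stable per experiment
    # but independent across experiments.
    digest = hashlib.sha256(f"{experiment_id}:{user_id}".encode("utf-8")).hexdigest()
    # Turn the first 32 bits of the hash into a number in [0, 1).
    point = int(digest[:8], 16) / 0x100000000

    cumulative = 0.0
    for name, weight in weights.items():
        cumulative += weight / total
        if point < cumulative:
            return name
    return name  # fallback in case of floating-point rounding


if __name__ == "__main__":
    split = {"A": 0.5, "B": 0.5}
    print(assign_variant("user-123", "onboarding-banner", split))
```

With equal weights this approximates a 50/50 split across a large audience. Changing the weights to, say, `{"A": 0.8, "B": 0.2}` shifts the proportions without any per-user bookkeeping.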

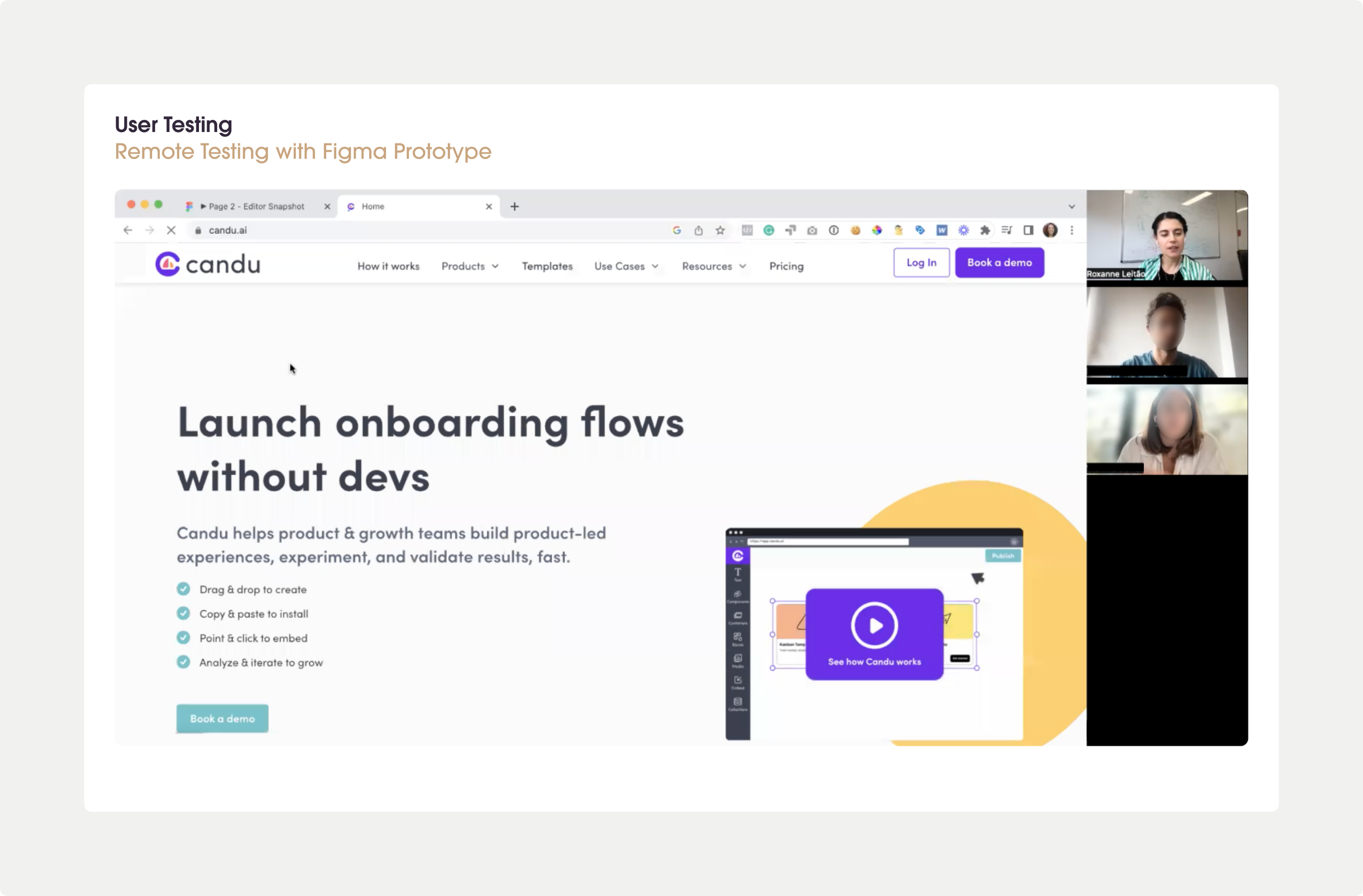

Figma prototypes built for user testing

User Testing

Iterating Towards the Ideal Solution

I conducted two rounds of user testing with product managers recruited from B2B and B2C SaaS organisations. All sessions were run remotely. Findings from the first round informed the design of a third prototype, which I tested in the second round.

Key findings from user testing:

Participants preferred creating content for each variant before defining experiment settings such as audience, goals, and timing.

They expected the platform to automatically calculate audience size, significance scores, and confidence intervals.

Participants expected to create an A/B test audience from their existing customer segments.

Simplicity was valued: participants preferred automated audience distribution over manual adjustments, even though manual control allowed greater customisation.

A replay of the initial setup on the results screen helped users recall key details, such as sample size, goals, and start/end dates.

Users wanted the platform to clearly indicate which variant performed best without needing to interpret complex statistical data.

“Yeah. I think this is more like it. This is much more clear to me. Because these have only one percentage point. First, I choose the segments. It will divide the segments into 2 versions A and B and I will set the percentage for each or only for one and it will add up to 100.”

“I actually prefer the reduced granularity of control because it’s a lot simpler to use.”

“What would be good to see too [on the results screen] is like: what was the initial target we set up?”
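The statistics participants expected the platform to handle for them can be sketched with a standard two-proportion z-test. This is an illustrative Python sketch, not Candu's actual calculation: the function `summarise_test`, its parameters, the verdict wording, and the 5% significance threshold are all assumptions. It returns a p-value, a confidence interval for the difference in conversion rates, and a plain-language verdict, so a non-technical user would only need to read the last of these.

```python
from math import sqrt
from statistics import NormalDist


def summarise_test(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   alpha: float = 0.05) -> dict:
    """Compare two variants' conversion rates (hypothetical helper)."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each variant needs at least one participant")

    normal = NormalDist()
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    diff = rate_b - rate_a

    # Pooled standard error: used for the test of "no difference".
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se_pooled == 0:
        p_value = 1.0  # identical all-or-nothing rates: no evidence either way
    else:
        z = diff / se_pooled
        p_value = 2 * (1 - normal.cdf(abs(z)))  # two-sided

    # Unpooled standard error: used for the interval around the difference.
    se = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    margin = normal.inv_cdf(1 - alpha / 2) * se

    if p_value < alpha:
        verdict = "Variant B performed best" if diff > 0 else "Variant A performed best"
    else:
        verdict = "No clear winner yet"

    return {
        "difference": diff,
        "p_value": p_value,
        "ci": (diff - margin, diff + margin),
        "verdict": verdict,
    }


if __name__ == "__main__":
    # Made-up numbers, purely to show the call shape.
    print(summarise_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)["verdict"])
```

Keeping the verdict separate from the raw numbers mirrors the testing feedback: the statistical detail stays available, but the summary does the interpreting.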

Final Design, Handover & Quality Assurance

The final design was created in Figma, with regular touchpoints between the design and engineering teams to ensure feasibility and alignment. I facilitated handover meetings, where any remaining details were finalised, and Jira tickets were created and linked to the relevant Figma screens. As the development cycle progressed, I remained involved in supporting the engineering team with quality assurance and testing, ensuring the A/B testing feature was built to spec and delivered a seamless user experience.

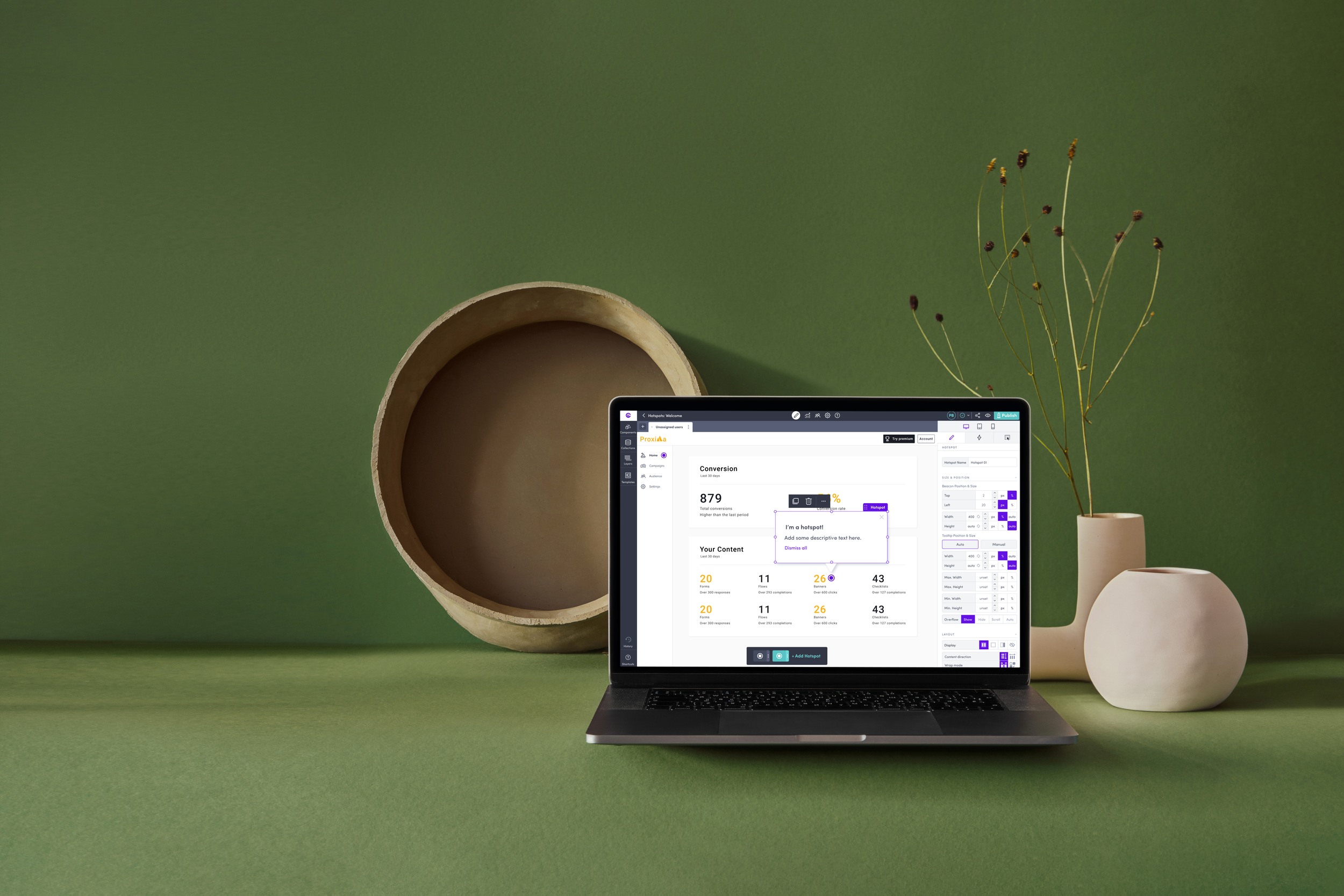

Final UI designs for the Candu A/B Experimentation feature

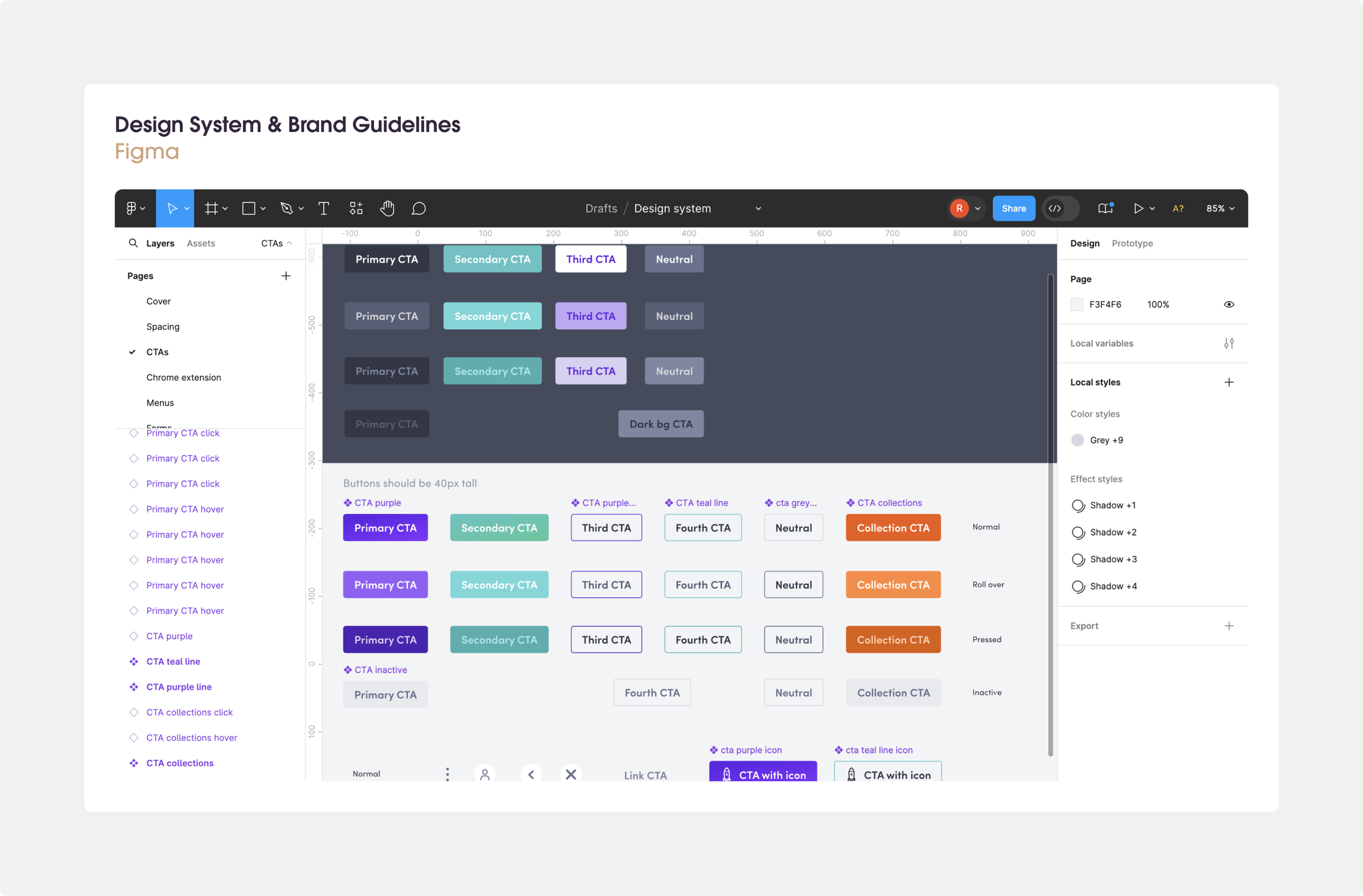

Updating the Candu Design System

As part of every project, I updated the Candu design system to align with the live product and ensure consistency with engineering components. I am responsible for maintaining this design system and onboarding junior designers to its use.