Refuge

Challenge

My research revealed that victims of domestic abuse were often being tracked or monitored through their digital devices without realising it. Many did not understand privacy settings, how to check data sharing, or how to regain control of compromised accounts and devices. I set out to design a safe, anonymous way for survivors to access support whenever and wherever they could.

My Impact

As lead designer and researcher, I worked directly with survivors and NGO staff to understand the realities of technology-facilitated abuse and co-design potential solutions. I facilitated workshops, developed and tested prototypes, and proposed the creation of a privacy and security chatbot. I later worked with Refuge.org.uk to refine and implement it. The chatbot has since been translated into four languages and continues to help survivors protect their digital privacy.

I've discussed this project on Slate's IF/THEN and the BBC's Digital Human podcasts, as well as in Wired.

Solution

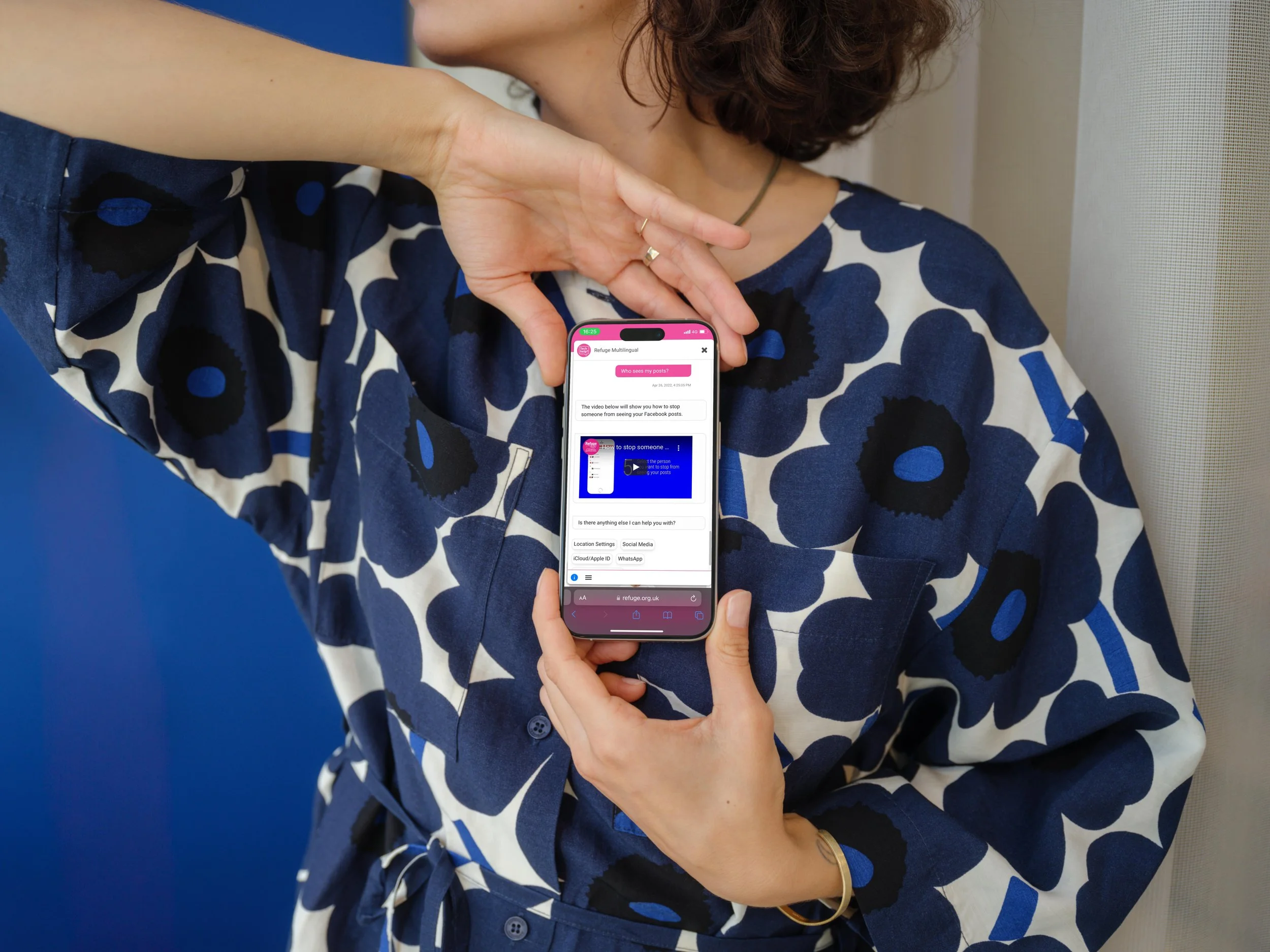

I designed and built a chatbot that provides anonymous, step-by-step guidance for checking and managing privacy and security settings across apps, devices, and social media. It uses short, accessible videos that can be safely followed on personal phones. Testing with survivors led to key refinements, such as slower pacing and clearer visuals. The final product offers discreet, on-demand support for people experiencing digital surveillance and has been featured by the BBC, Slate, and Wired for its impact.

Role: Designer & Researcher | Year: 2019-2020

Process

User Research

Understanding the Scope of Digital Surveillance

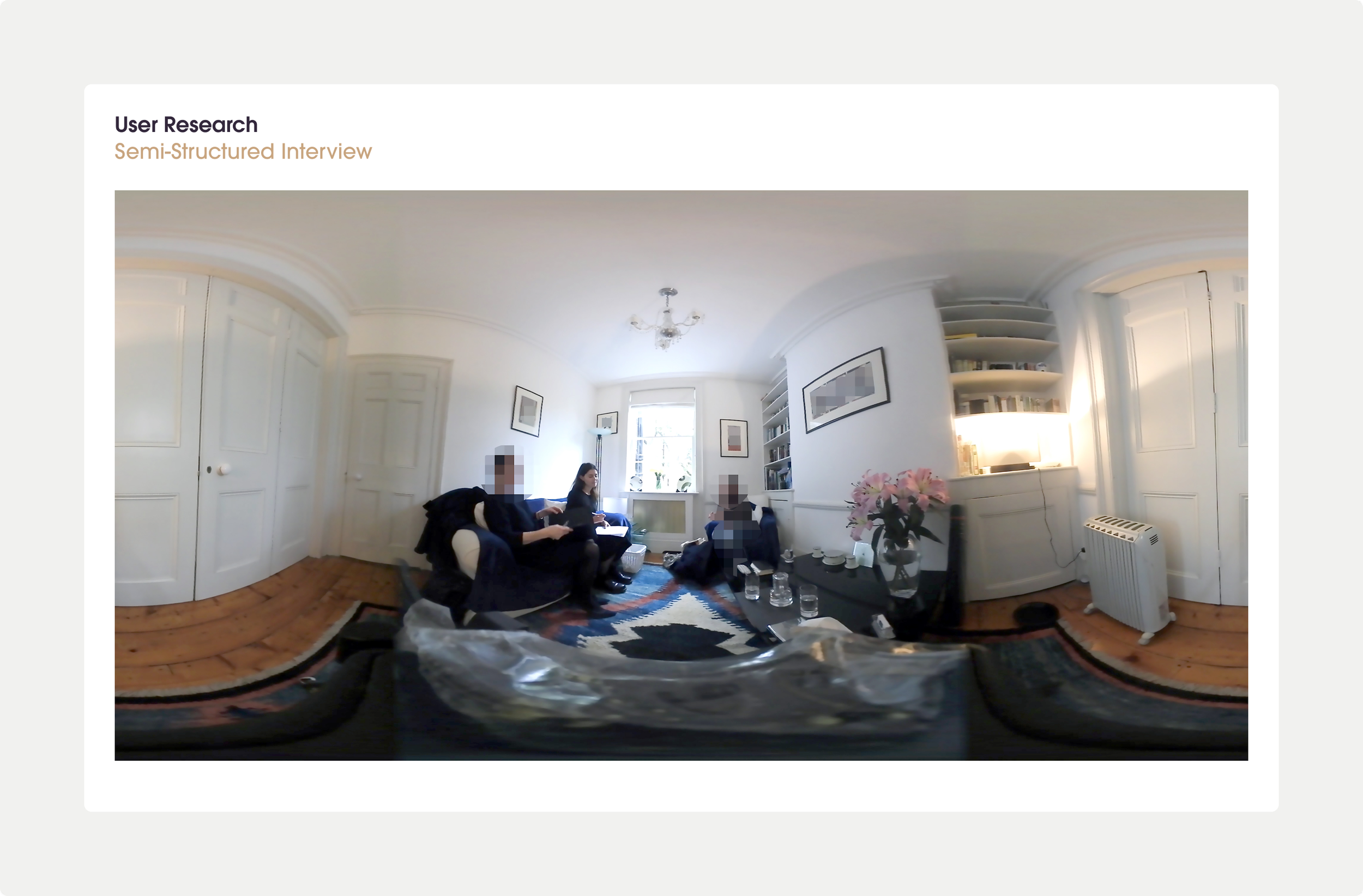

Data shows that over 72% of domestic abuse cases in the UK involve digital surveillance by the perpetrator. In the first phase of the project, I conducted semi-structured interviews with survivors and professional support workers from various NGOs. The interviews with survivors were conducted in person, with a trained therapist present to ensure emotional safety.

Through thematic analysis, several key issues emerged:

Many victims do not understand the privacy settings on their devices, apps, or social media accounts.

Victims often don’t know how to check whether their location is being shared or who has access to their data.

Wearables are increasingly being used to track victims' locations around the clock.

Smart home devices, initially installed to enhance security or monitor pets, are being exploited for surveillance.

One participant highlighted the extent of this intrusion:

“My partner installed Zoemob [a family locator app] on my phone. I immediately lost all my privacy. It was the perfect tool to perpetrate abuse. Although these apps are extremely invasive, they do not seem to break any laws.”

Co-Design Workshops

Crafting Solutions with Survivors

To address the findings from the research, I facilitated a series of co-design workshops involving over 70 participants, including survivors and NGO staff. These workshops, held over several months, encouraged participants to explore domestic abuse within the context of digital privacy and security. Activities included scenario creation, exercises that asked participants to think from the perpetrator’s perspective, and brainstorming sessions to generate ideas for improving digital privacy through devices, apps, and social media.

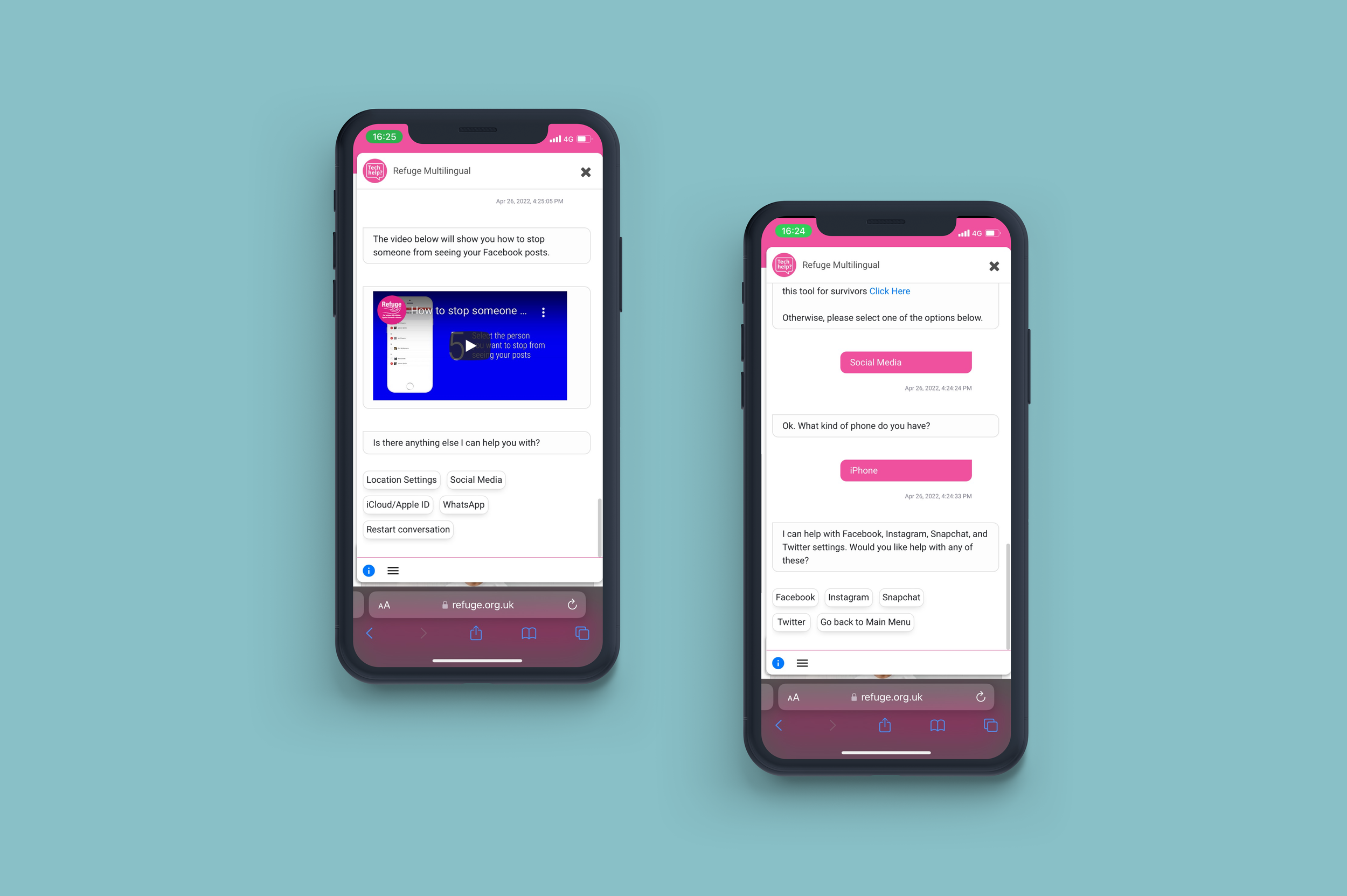

After capturing all the insights and ideas, we prioritised them with senior leadership from Refuge based on potential impact and development time. The decision was made to build a chatbot that could provide anonymous, on-demand support, helping victims manage their privacy and security settings. The chatbot would offer guidance through short instructional videos, showing users how to adjust settings on both iOS and Android devices, covering apps like WhatsApp, Find My Friends, and Facebook.

Prototyping & User Testing

Building a Safe and Effective Tool

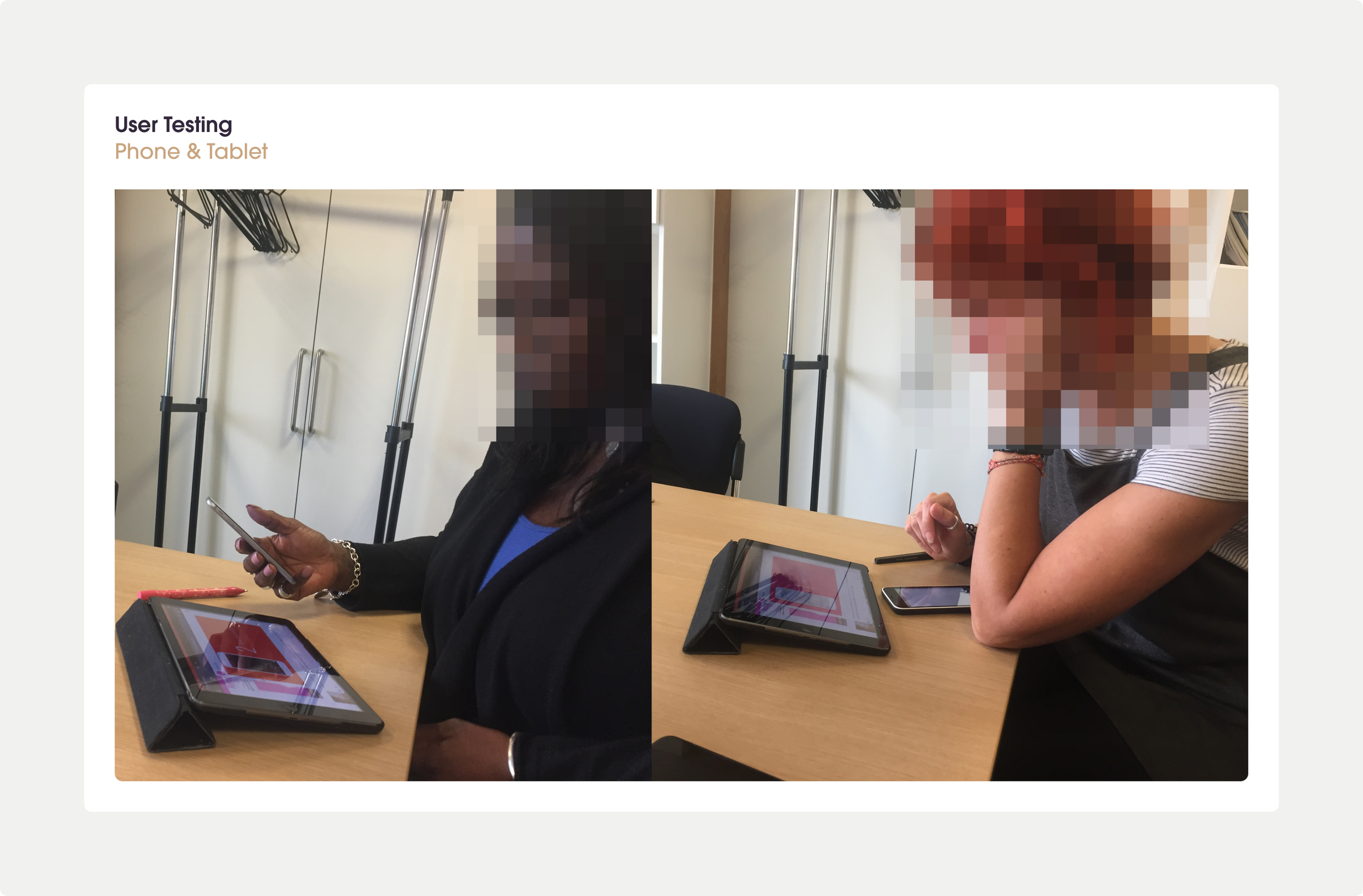

I developed a prototype chatbot using open-source software and tested it in person with survivors and NGO staff on their own mobile devices. Feedback from user testing led to several iterations. For example, we found that the videos needed to be slowed down, the instructions simplified, and the visuals improved so that each step was easy to follow.

Users offered direct feedback during testing:

“Can I just say something about this? I have to keep pausing it to read what it was saying. I mean and that’s fine as long as the person using it is comfortable with pausing but that might be something that could be fixable by making each step stay longer on the screen.”

“Only thing I’d say, you know in the video, the small little circle — you can’t actually see it that clearly.”

Implementation & Continuous Improvement

A Lasting Solution

Once the chatbot was refined, I worked closely with the engineering team at Refuge to ensure a smooth handover. The chatbot has now been live for four years, translated into four languages, and continues to support victims in managing their digital privacy and security settings effectively.